AIM 2023 is basically done at this point and I am about as happy with how the compo went as I am relieved that it's over. As much as I enjoy hosting and judging, it is very time-consuming - in fact, I have numbers to show for the amount of time that was spent on the review part alone. But I felt like for this compo, I was more familiar with all the entries than I have ever been in any previous AIM. Most AIMs, I would maybe listen to entries a few times on a whim. This AIM, I probably listened to every entry at least 10 times in its entirety, if not more.

The biggest feat I managed to accomplish this AIM was reviewing every approved entry - yes, all 82 of them. In past AIMs, I'd be fortunate to get maybe 30 reviews done if I pushed really hard. This time around, I managed to do 82 in the span of a month and a half and it wasn't as difficult as I thought. I also made some behind-the-scenes changes, mainly to how I managed compo data and approached review writing. At my current job, I learned how to use some productivity software and basic databases and saw a way to incorporate these into the AIM process, and I wanted to share a bit of how that happened in this post. But first, let's look at some entries that I think need more love.

AIM 2023 Favourites

I want to mention a few AIM entries that I thought were great or felt like a showcase of the composer's improvements compared to previous AIMs.

Honourable Mentions

In no particular order, here are some entries I love from this year:

Environments 2D by @Cresince

Honestly probably the entry I'm most disappointed didn't get into the top 20. I love the groove and the sound design is fantastic.

ACID RAIN by @Dry

Those guitar chords perfectly encapsulate a rainy scene. I love the panning effects in the melody, too.

sweat by @SamaadBae5

This manages to be chill and is also surprisingly good music to work out to? At least that's how I felt when I worked and sweated to this tune.

Tower of Tech by @Irish-Soul

This came SO CLOSE to getting into the top 20. The composition in this track is so well-crafted, especially with how the melody develops.

Horizon by @underscore8298

The chord progression and the rising synths at the beginning are so beautiful. Sunvox for the win!

I'm Naut Dreaming by @TheRiskster

I'm here for this romantic, pretty theme about two lovers floating in outer space. This is a perfect piece to listen to if I want to chill at night.

Travel To The Far Side by @DigitalProdigy

When I heard the composer wanted this track to be removed from AIM, I was actually quite disappointed. I love DigitalProdigy's other two entries in AIM, but this one blew me away when I first heard it. I think it does a great job at capturing the rave against the blinding lights.

Winter by @Siberg

This is another former entry that I really loved that got removed and re-added during the judging period. It was probably one of my most replayed tracks when it was still in the compo, really just for that one higher melody line that soars above the driving bassline.

Most Improved

I think these two composers are the ones whose entries I was most impressed by this year.

@Anonymous-Frog - I like "Archdukes of the Machine" a lot. It struck the right balance of horror and musicality and it all worked together well. I don't remember much of your entry from last year, but I feel like this year, you definitely stepped up.

@DiosselMusic submitted two very nice entries this year that I think showed a lot of improvement. Both have great and different vibes.

Earworms

I wanted to shout out these two entries specifically for randomly getting stuck in my head, often, at times when I wasn't judging.

Kubarium by @Dynamic0

Final Frontier by @TebyTheCat

Mass Review Writing

In previous AIMs, the biggest obstacle when it came to writing reviews was burnout. It was easier to find motivation right at the start of the judging period for those compos, but after a day or two, it would become tedious. From what I've read about people's previous experience with the compo, participants really wanted feedback on their work, a wish I understand and would love to fulfil. I was fortunate in past AIMs to have users who did take the time to review every entry and leave detailed notes on why they scored things the way they did.

This year, I wanted to make sure I did my part and surprisingly, I didn't burn out during the entire course of writing 82 reviews. Here's what I did differently this time around:

Listening, Always

Pretty much from the moment the approved playlist was created, I listened to it whenever I could. The best time was on the nights when I had to wash the dishes. I wrote out a few notes and even a few reviews just to stay ahead of the curve. This was probably the most effective thing I did, although the catch is that during this time, people could still change or remove their entries. Still, the benefits definitely outweighed the drawbacks here and even if people did make changes, I could go into the judging period already familiar with the entries that were submitted early on rather than being completely unfamiliar with 80+ entries by the time the compo ended.

Productivity Platforms

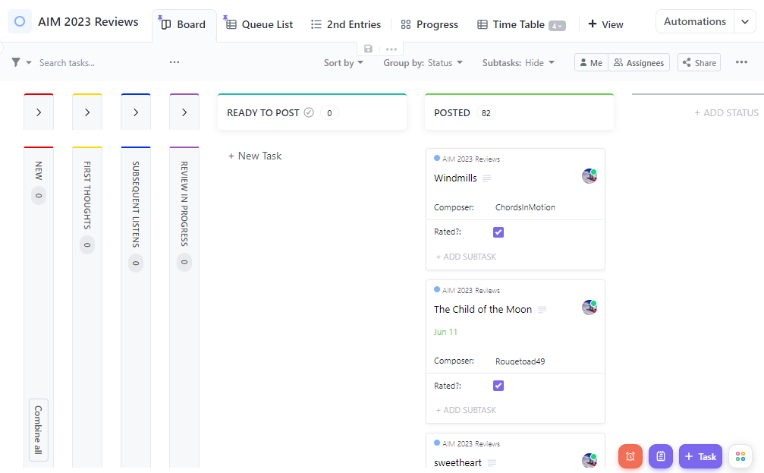

When entries were submitted and approved, I would add them to a ClickUp board and set some statuses to determine stages at which I would listen and evaluate the entries. I believed it was important to have 2 separate listening sessions for every entry in addition to a time to listen and actually write the review. Here's a snippet of what the board looks like (most of the columns are empty now since everything got reviewed):

My mood can sometimes affect the way I listen to certain tracks and I wanted to make sure I wasn't writing a review influenced by that alone. Doing quick point-form notes, especially at the start when I could just immediately write anything I felt about the track, gave me time later to work through anything that confused me and home in on the things I kept thinking of or bringing up whenever I listened. It was also great because I could put my notes/review in the description while using the comments in the sidebar to copy-paste the Author Comments and read them while I listened and wrote. Not sure how many know it, but I do like getting insight into how composers approach their work and I like to factor at least some of it into the review.

There was actually another big hindrance when it came to writing reviews: overthinking what to write about the tracks, and struggling to separate my initial feelings from an analysis of how a track makes me feel or how its elements interact to create an experience. Allowing time to let loose first and filter afterwards really helped me prepare for the review writing itself.

Was it a bit much? Probably. But this is the only time I've written reviews for every entry in an AIM and this was the one thing that helped me do it, so I would take this as a personal accomplishment and an approach that I'm likely going to use for next year's compo.

Time-Tracking Stats

Oh yeah, another thing I did was I tracked time to see how long it took for me to do all the reviews.

- Total time spent on reviewing was 48 hours and 59 minutes. (This includes the note-taking as well as the review-writing itself.)

- Average time spent reviewing/note-taking for each entry: 36 minutes, with the shortest amount being 19 minutes and the longest being 59 minutes.
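
As a quick sanity check, the two figures above are consistent with each other. The snippet below only redoes the arithmetic from the stated total and the 82 reviewed entries; the variable names are just for illustration.

```python
# Figures taken from the stats above.
total_minutes = 48 * 60 + 59   # 48 hours and 59 minutes, in minutes
entries = 82                   # number of reviewed entries

# Mean time per entry, in minutes.
average = total_minutes / entries

print(f"{total_minutes} minutes over {entries} entries")
print(f"average: {average:.1f} minutes per entry")
```

Rounded to the nearest minute, that lands on the 36 minutes quoted above.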

While I do like being able to write feedback, reviews are very time-consuming to create. I might be able to cut that time down a bit, though some entries give me more trouble than others. The best I can do is show that this is how I perceive the entry and be more specific in explaining what I feel works and what doesn't. And I think that's the thing about reviews, especially here. I think they work best when they're seen as insight into that reviewer's perspective on your track.

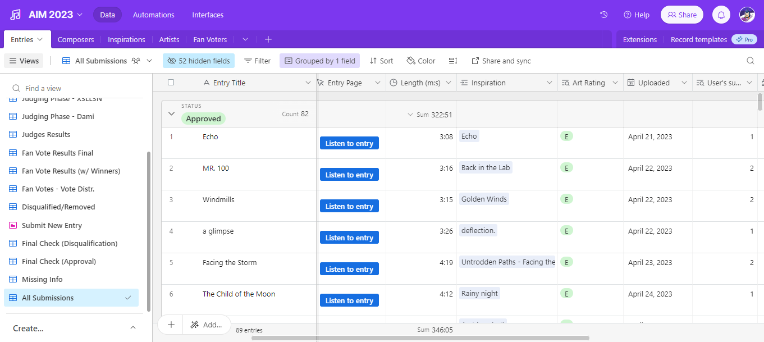

Databases

I also used Airtable this year to keep track of entries as they came in, along with scores and Fan Votes. The thing I liked most about it was that I could format the same data and use it for different purposes within the database. For instance, once I had entered an entry's title and URL, that one record could support a ton of different fields, anything from the composer to the duration, even judge and fan scores. I could use a part of it for the Master List and another for the judges' score sheets. If someone happened to rename their entry, I just had to make one edit and boom, everything was updated across the board. I also didn't have to worry if a judge decided to sort or move around columns, which was quite nice.

Another thing I liked a lot about this system was that it made it easier to determine stats as I could ensure everything was linked up properly and not worry about misplacing a formula at some point down the line. By linking up Artists to their Inspirations, for example, it was very easy to tell how many of an artist's works were used as inspiration for this particular compo.
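
To illustrate the single-source-of-truth idea behind linked records, here is a minimal Python sketch. It does not use Airtable or its API; the `Entry` class, the field names, and the sample data are all made up for illustration, and the real base is certainly laid out differently.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Entry:
    title: str
    composer: str
    inspiration_artist: str  # hypothetical field: artist whose work inspired the entry


# One table of records, keyed by ID (hypothetical sample data).
entries = {
    1: Entry("Track A", "composer_one", "Artist X"),
    2: Entry("Track B", "composer_two", "Artist Y"),
    3: Entry("Track C", "composer_three", "Artist X"),
}

# Two "views" that store only IDs, never copies of the title.
master_list = [1, 2, 3]
score_sheet = {1: 7.5, 2: 8.0, 3: 6.5}


def titles(ids):
    """Look up the current title for each linked entry ID."""
    return [entries[i].title for i in ids]


# A rename is a single edit on the record...
entries[2].title = "Track B (Renamed)"

# ...and every view that links to it sees the new title.
print(titles(master_list))
print(titles(score_sheet))

# Stats follow from the links: how many entries drew on each artist.
inspiration_counts = Counter(e.inspiration_artist for e in entries.values())
print(inspiration_counts.most_common())
```

Because the views hold references rather than copies, there is no second place where a renamed title or a misplaced formula could drift out of sync.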

Unfortunately, I think I didn't do a great job onboarding judges onto the base. I originally built an Interface to make it easier to focus on the scoring aspect without the rest of the tables getting in the way, but I didn't realize that inviting the judges there would actually just send them to the base instead of the Interface.

Anyway, here's a bit of what that base looks like. For the results, I duplicated this base and removed a lot of the parts that were used to check on entries and their art inspirations.

Next Year

I've thought about some things I'd want to do or try a bit differently for next year. One great thing, however, is that LD-W seems very likely to be a judge for AIM 2024 and that's a big load off my plate. But here are two thoughts I have for next year:

- Some more refinements to the scoring system. This is my second time doing scores for AIM and I think we might need to add something just so there aren't so many ties. This could be adding another category for scores (not sure what though) or allowing a precision of 0.1. Or I could come up with a more consistent tiebreaker system. I don't really know yet.

- Distributing reviews among judges. It might help, as reviewing 82 entries really eats away at the time. The downside is that each participant only receives a review from 1, maybe 2 judges. But that seems par for the course compared to previous AIMs. It would certainly be a lot more feasible to get judging done on time, I think. It might also help in another way: a judge with more experience reviewing a particular aspect might be able to offer more valuable insight than a judge who isn't as familiar with that aspect.
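
For the first idea, here is a rough Python sketch of how finer precision and a secondary sort key can each reduce ties. Everything in it is an assumption for illustration: the scores are invented, the current rounding step is assumed to be 0.5, and using a fan score as the tiebreaker is only one possible choice, not how AIM actually works.

```python
from collections import Counter


def tied_entries(totals):
    """Count entries whose total is shared with at least one other entry."""
    counts = Counter(totals.values())
    return sum(1 for t in totals.values() if counts[t] > 1)


# Hypothetical averaged judge scores before rounding.
raw = {"A": 7.74, "B": 7.58, "C": 8.12, "D": 8.31}

# Assumed current precision (nearest 0.5) versus the proposed 0.1.
to_half = {name: round(score * 2) / 2 for name, score in raw.items()}
to_tenth = {name: round(score, 1) for name, score in raw.items()}

print("ties at 0.5 precision:", tied_entries(to_half))
print("ties at 0.1 precision:", tied_entries(to_tenth))

# Alternative: keep the coarse scores and break ties with a second key.
fan_score = {"A": 4.1, "B": 4.4, "C": 3.9, "D": 4.0}  # hypothetical tiebreaker
ranked = sorted(to_half, key=lambda n: (to_half[n], fan_score[n]), reverse=True)
print(ranked)
```

Sorting on a tuple keeps the judges' score as the main criterion and only consults the second value when two entries are level, which makes the tiebreak rule explicit and repeatable.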

But other than that, I'm tired.

I love being a part of this community and listening to a wide range of entries. It really makes me happy to read Author Comments about how people take time to ensure they make it to AIM and I'm appreciative of you all for sharing your music with me and with everyone else.

While I will continue to help out with getting the AIM 2023 album ready, I feel like I need a moment to focus on other aspects of my life that aren't AIM-related. I am going on a short trip at the end of the month, so I do have that to look forward to. I also really need to work on my sleep schedule because it absolutely sucks. I've made a habit of going out to the gym every morning at 6 AM but I still only get like 2-5 hours of sleep on workdays. Coffee can only help so much.

Thank you all again for being a part of AIM and making the contest something I get to look forward to every year. 💜

Cresince

Thank you for making AIM something great to look forward to! I appreciate the kind words you had for my entry. It's really impressive to hear you reviewed everyone's songs! <3

Random-storykeeper

Thanks for participating, and keep making great songs!